GenAI is Changing the Attacker Playbook - Here's How.

Introduction

The cybersecurity landscape has always been a high-stakes game of cat and mouse. For every firewall, there’s a hacker trying to breach it. For every patch, a new exploit is already brewing. But now, a new force has entered the game—and it’s rewriting the rules: Generative AI (GenAI).

GenAI isn’t just another buzzword. It marks a fundamental shift in how attackers operate. What used to require technical expertise and time can now be done faster, smarter, and at scale—with AI doing the heavy lifting.

Here are five ways we see cybercriminals using GenAI to supercharge social engineering:

1. Hyper-Realistic Phishing Emails

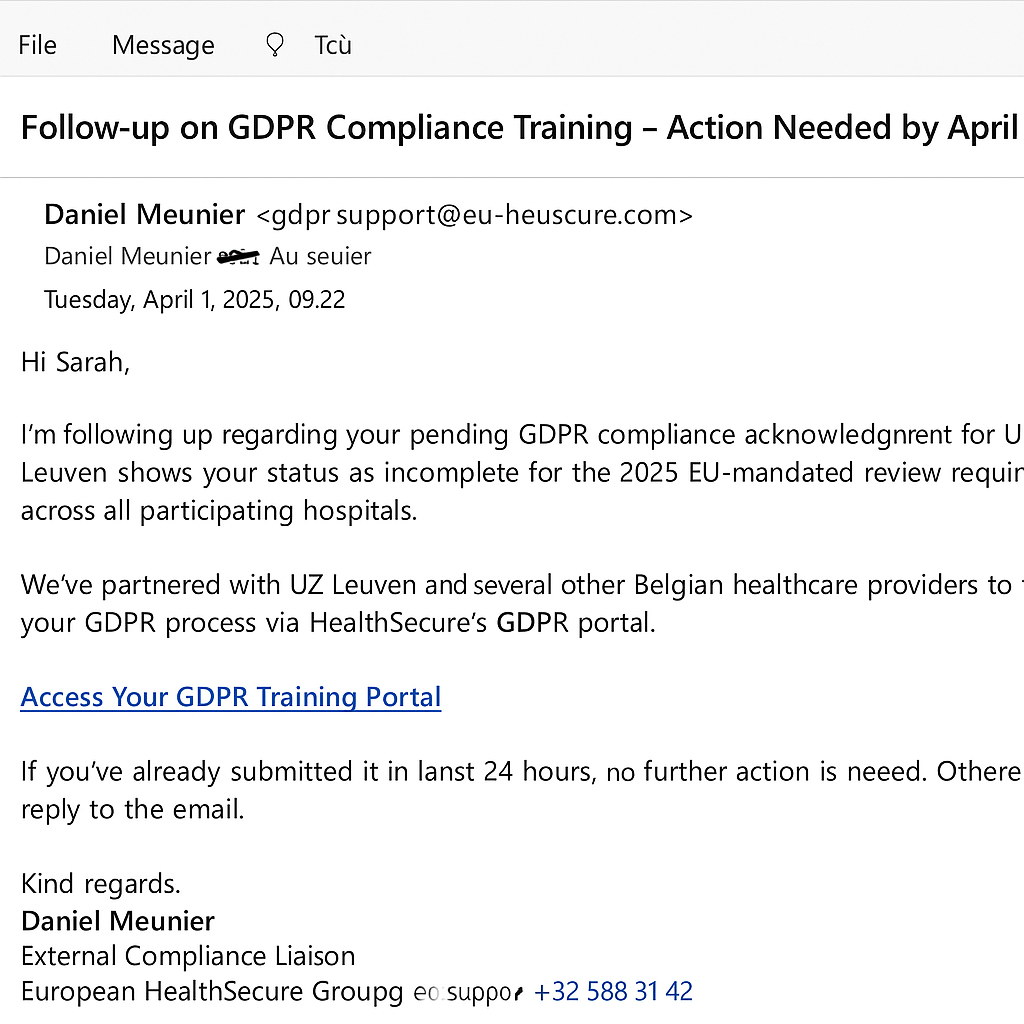

At first glance, this email looks harmless, even routine. Could you tell it’s a phishing attempt? Most people couldn’t. Aimed at hospital staff, it follows a clear modus operandi. Here is what gives it away:

- Familiar-looking but external: Mimics the kind of trusted third-party provider that is common in EU healthcare.

- Personalized: Uses real names, roles, and systems like KWS and Medibridge.

- Subtle urgency: Mentions “system access restrictions” without causing alarm.

- Convincing domain: eu-healthsecure.com appears official but is entirely fake.

- Reply trap: Invites direct replies to extend the scam or extract more information.

These emails are becoming increasingly realistic — almost indistinguishable to the human eye.
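Even when the wording is flawless, some of the signals listed above can still be checked by a machine. The following is a minimal sketch, not a production filter: it flags an untrusted sender domain, soft urgency, and an invitation to reply. The trusted-domain allowlist, the phrase lists, the sample message, and the `it-support` mailbox are all assumptions made up for illustration; only the `eu-healthsecure.com` domain and the "system access restrictions" wording come from the example above.

```python
from email import message_from_string
from email.utils import parseaddr

# Assumption: domains this organisation really works with (hypothetical values).
TRUSTED_DOMAINS = {"hospital.example", "medibridge.example"}

# Assumption: illustrative phrase lists, not a vetted ruleset.
URGENCY_PHRASES = ("access restriction", "action required", "will be suspended")
REPLY_PHRASES = ("reply directly", "reply to this email")


def phishing_signals(raw_email: str) -> list[str]:
    """Return human-readable warning signals found in a plain-text email."""
    msg = message_from_string(raw_email)
    signals = []

    # Signal 1: the sender's domain is not on the allowlist.
    _, address = parseaddr(msg.get("From", ""))
    domain = address.rpartition("@")[2].lower()
    if domain not in TRUSTED_DOMAINS:
        signals.append(f"untrusted sender domain: {domain}")

    # This sketch only reads single-part, plain-text bodies.
    body = "" if msg.is_multipart() else str(msg.get_payload()).lower()

    # Signal 2: subtle urgency wording.
    if any(phrase in body for phrase in URGENCY_PHRASES):
        signals.append("urgency wording")

    # Signal 3: the message invites a direct reply.
    if any(phrase in body for phrase in REPLY_PHRASES):
        signals.append("invites a direct reply")

    return signals


# Hypothetical message modelled on the indicators described above.
sample = """From: EU HealthSecure <it-support@eu-healthsecure.com>
To: staff@hospital.example
Subject: KWS access review

Please confirm your KWS login to avoid system access restrictions.
Reply directly to this email if you have questions.
"""

for signal in phishing_signals(sample):
    print(signal)
```

Fixed phrase lists like these are exactly what a GenAI-written email can sidestep by rewording, so a check of this kind is best treated as one weak signal among several rather than a verdict.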

2. Business Email Compromise (BEC)

But the threat isn’t limited to email from outside. Since the launch of ChatGPT, Business Email Compromise (BEC), which often behaves like an insider threat, has surged. In these attacks, criminals use phished employee accounts, or impersonate trusted contacts, to trick staff into transferring funds, causing both financial and operational damage. BEC attacks reportedly rose 80% in 2022 and made up 73% of cyber incidents in 2024. A well-known case is Toyota Boshoku, a Toyota Group supplier, in 2019: an attacker posed as a business partner and tricked employees into sending $37M to a fraudulent account.

AI is accelerating this trend due to two factors: the rise of AI-powered BEC toolkits accessible to low-skilled attackers, and phishing campaigns run by initial access brokers who sell stolen credentials to ransomware groups. As ransomware incidents increase, BEC attacks are also becoming more common.

3. Infiltrating Chat and Third-Party Tools

Attackers aren’t just changing how they operate — they’re also shifting where they strike. With over 50% of business communication now happening outside email, platforms like Microsoft Teams, Slack, LinkedIn, and tools like Zendesk have become new targets.

Previously, these channels were harder to breach, often requiring complex, multi-step attacks. But generative AI has changed that. Attackers can now craft convincing messages that trick employees or partners into granting access — enabling lateral movement and deeper infiltration.

This isn’t just theory. In January 2025, Sophos reported that threat groups STAC5143 and STAC5777 are exploiting Microsoft Office 365 services like Teams to breach organizations. IT Brew also noted that attackers are abusing Zendesk’s free trials and lack of email verification to create fake company accounts, phish employees, and gain access to sensitive data—posing serious risks to trust and operations.

4. Weaponizing Public Data

The key question is how attackers make social engineering so personalized. The answer lies in the abundance of stolen credentials on the dark web and public data. Kaspersky reports data theft attacks have surged sevenfold in three years. Credentials are sold via subscriptions, aggregators, or exclusive shops—sometimes for just $10 per log file.

Even if your company is protected by strong identity controls and MFA, attackers still leverage public data. With AI, they can quickly scrape LinkedIn to find new hires, then use stolen credentials or craft tailored emails based on social media interests to gain access. Once in, they move laterally toward high-value targets.

Generative AI now reduces this process from hours to seconds—making it nearly impossible to stop without using similarly advanced defenses.

5. Multilingual Phishing at Scale

A final and important shift driven by GenAI is the linguistic precision of social engineering attacks. A Harvard study from November 2024 found that the latest AI models are now just as good as humans at writing spear phishing emails. Because these models tailor each message to its recipient, older defenses like signature detection, which looks for known patterns or exact matches from past threats, become much less effective.
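To see why per-recipient wording defeats exact-match signatures, consider a deliberately crude sketch. It assumes a "signature" is just a hash of the normalised message text; real products use richer signatures, and both messages below are invented for illustration.

```python
import hashlib


def signature(text: str) -> str:
    """Lower-case the text, collapse whitespace, and hash it."""
    normalised = " ".join(text.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


# Hypothetical phishing message that was seen and recorded in the past.
known_phish = "Dear colleague, please verify your account to avoid access restrictions."
KNOWN_SIGNATURES = {signature(known_phish)}


def matches_known_threat(text: str) -> bool:
    """True only if the message hashes to a previously recorded signature."""
    return signature(text) in KNOWN_SIGNATURES


# The same template sent again, with only spacing and capitalisation changed.
resent = "Dear colleague,  please verify your account to avoid ACCESS restrictions."

# The same request, reworded for one specific recipient.
personalised = "Hi Anna, could you re-confirm your KWS login so your access isn't restricted?"

print(matches_known_threat(resent))        # True
print(matches_known_threat(personalised))  # False
```

The resent template is caught because normalisation absorbs the cosmetic changes, while the personalised variant carries the same intent and slips through. That gap is what the paragraph above describes.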

The greatest impact is on non-English speakers. Phishing in their languages used to be betrayed by clumsy translation; now that AI writes fluently in most languages, those small mistakes and unnatural phrasings are far harder to find. Legacy tools, built on fixed rules and static algorithms and often trained primarily on English data, aren’t designed to keep up. They lack GenAI architectures that can continuously learn and adapt to new threats.

Final Thought

GenAI is changing the attacker playbook. Modern threats move in seconds, not days, and spread not only through email but also via chat and third-party tools. To protect against this, we need a GenAI-powered defense that can learn, adapt, and respond just as fast. These threats will keep evolving and won’t get any easier to handle, which is why the most important step is ensuring your people and assets are properly protected against them.